library(DBI)

library(tidyverse)

library(dbplyr)

library(knitr)3 Time Series Analysis

Chapter 3 of Tanimura (2021) is really two chapters. The first part of the chapter discusses some finer details of dates, date-times, and time stamps. This material is important, but a little technical and bewildering without some concrete use cases. This complexity is compounded by the use of DuckDB here and PostgreSQL in Tanimura (2021), as some details work a little differently from one backend to the other. Finally, while there are some interesting issues in using dbplyr with timestamp data, there probably need data and settings to fully comprehend. As such, I have put the code related to this part of Chapter 3 of Tanimura (2021) in Section 1, part of the appendix.

The second part of the chapter is where Tanimura (2021) really starts to take off. This is where it starts playing around with real data.

3.1 The Retail Sales Data Set

We read the data

db <- dbConnect(duckdb::duckdb())

retail_sales <-

tbl(db, "read_csv_auto('data/us_retail_sales.csv')") |>

compute(name = "retail_sales")Now that we have the data in R, a few questions arise.

First, why would we move it to a database? Second, how can we move it to a database? Related to the previous question will be: which database to we want to move it to?

Taking these questions in turn, the first one is a good question. With retail_sales as a local data frame, we can run almost all the analyses below with only the slightest modifications. The modifications needed are to replace every instance of window_order() with arrange().1 The “almost all” relates to the moving average, which relies on window_frame() from dbplyr, which has no exact equivalent in dplyr.

So the first point is that “almost all” implies an advantage for using dbplyr. On occasion, the SQL engine provided by PostgreSQL will allow us to do some data manipulations more easily than we can in R. But of course, there are many cases when the opposite is true. That said, why not have both? Store data in a database and collect() as necessary to do things that R is better at?

Second, performance can be better using SQL. Compiling a version of this chapter using dplyr with a local data frame for the remainder took just under 14 seconds. Using a PostgreSQL backend, it took 8.5 seconds. Adding back in the queries with window_order() that I removed so that I could compile with dplyr and using DuckDB as the backend, the document took 8.3 seconds to compile (this beat out PostgreSQL doing the same in 9.6 seconds). While these differences are not practically very significant, they could be with more demanding tasks. Also, a database will not always beat R or Python for performance, but often will and having the option to use a database backend is a good thing.

Third, having the data in a database allows you to interrogate the data using SQL. If you are more familiar with SQL, or just know how to do a particular task in SQL, this can be beneficial.2

Fourth, I think there is merit in separating the tasks of acquiring and cleaning data from the task of analysing those data. Many data analysts have a work flow that entails ingesting and cleaning data for each analysis task. My experience is that it is often better to do the ingesting and cleaning once and then reuse the cleaned data in subsequent analyses. A common pattern involves reusing the same data in many analyses and it can be helpful to divide the tasks in a way that using an SQL database encourages. Also the skills in ingesting and cleaning data can be different from those for analysing those data, so sometimes it makes sense for one person to do one task, push the data to a database, and then have someone else do some or all of the analysis.

Regarding the second question, there are a few options. But for this chapter we will use duckdb

To install DuckDB, all we have to do is install.packages("duckdb").

Then we can create a connection as follows.

Here we use the default of an in-memory database. At the end of this chapter, we discuss how we could store the data in a file (say, legislators.duckdb) if we want persistent storage.

3.2 Trending the Data

3.2.1 Simple Trends

SELECT sales_month, sales

FROM retail_sales

WHERE kind_of_business = 'Retail and food services sales, total'

ORDER BY 1| sales_month | sales |

|---|---|

| 1992-01-01 | 146376 |

| 1992-02-01 | 147079 |

| 1992-03-01 | 159336 |

| 1992-04-01 | 163669 |

| 1992-05-01 | 170068 |

| 1992-06-01 | 168663 |

| 1992-07-01 | 169890 |

| 1992-08-01 | 170364 |

| 1992-09-01 | 164617 |

| 1992-10-01 | 173655 |

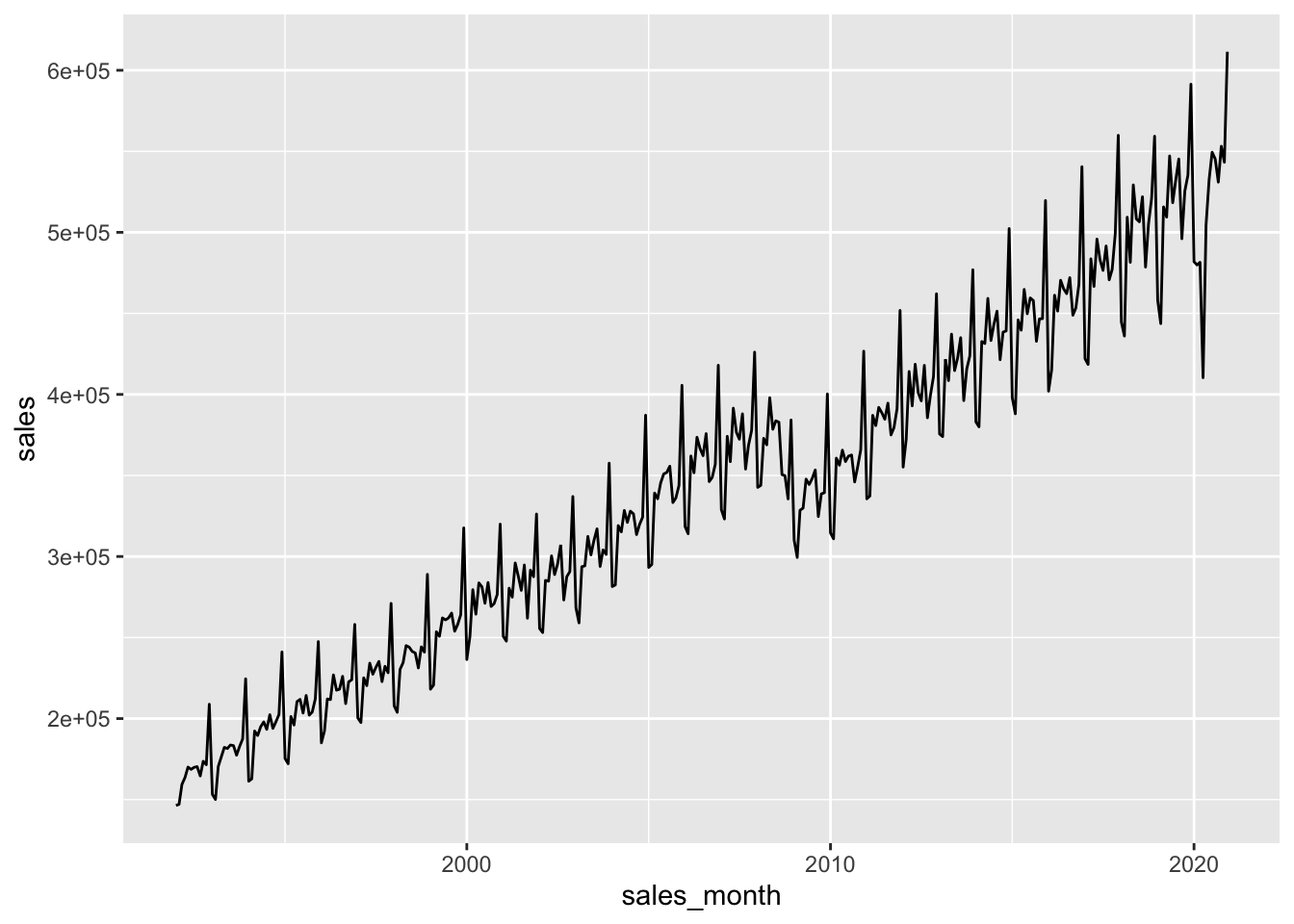

retail_sales |>

filter(kind_of_business == 'Retail and food services sales, total') |>

select(sales_month, sales) |>

ggplot(aes(x = sales_month, y = sales)) +

geom_line()

SELECT date_part('year',sales_month) as sales_year,

sum(sales) as sales

FROM retail_sales

WHERE kind_of_business = 'Retail and food services sales, total'

GROUP BY 1

;| sales_year | sales |

|---|---|

| 1992 | 2014102 |

| 1993 | 2153095 |

| 1994 | 2330235 |

| 1995 | 2450628 |

| 1996 | 2603794 |

| 1997 | 2726131 |

| 1998 | 2852956 |

| 1999 | 3086990 |

| 2000 | 3287537 |

| 2001 | 3378906 |

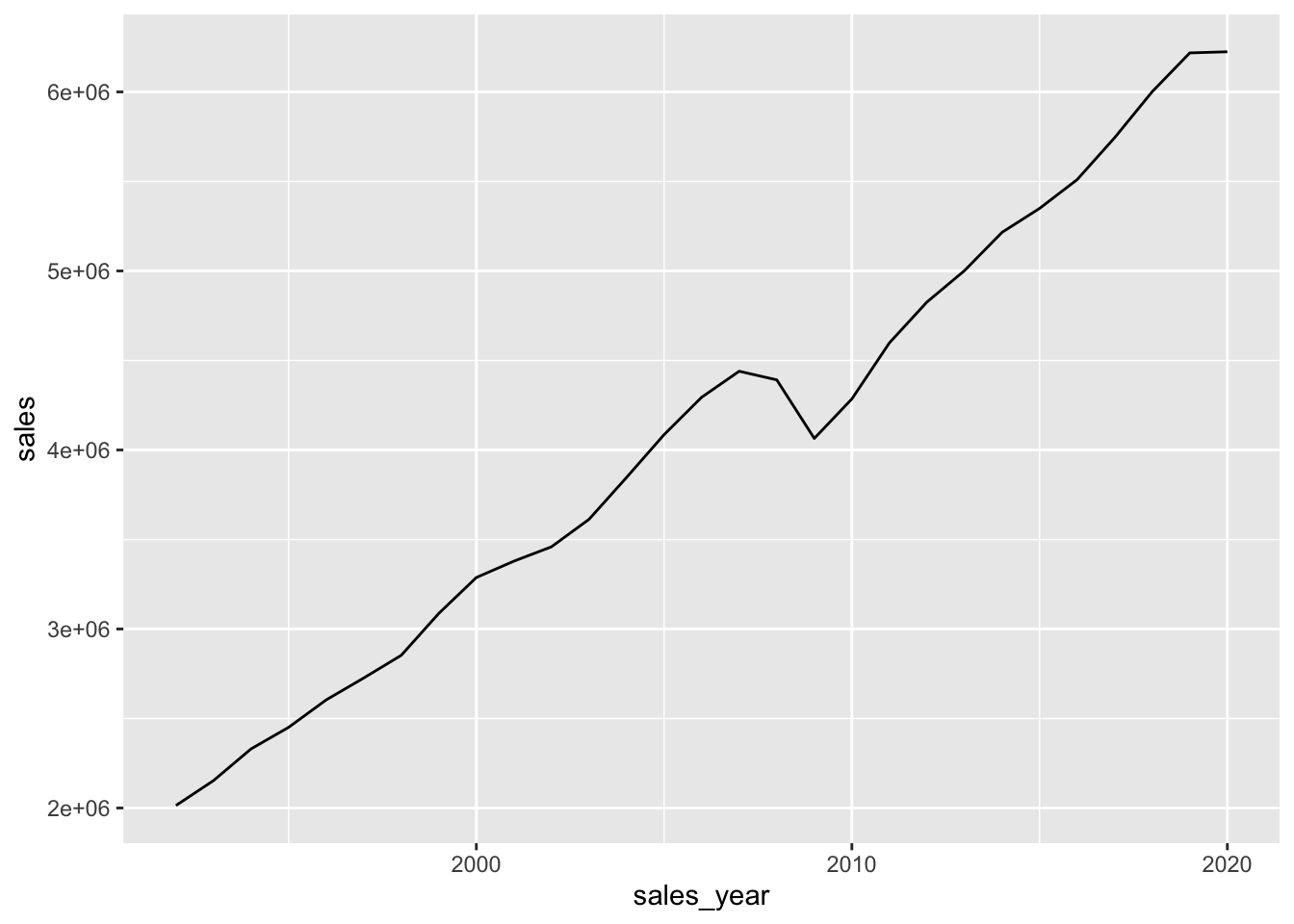

retail_sales |>

filter(kind_of_business == 'Retail and food services sales, total') |>

mutate(sales_year = year(sales_month)) |>

group_by(sales_year) |>

summarize(sales = sum(sales, na.rm = TRUE)) |>

ggplot(aes(x = sales_year, y = sales)) +

geom_line()

SELECT date_part('year',sales_month) as sales_year,

kind_of_business, sum(sales) as sales

FROM retail_sales

WHERE kind_of_business IN

('Book stores',

'Sporting goods stores',

'Hobby, toy, and game stores')

GROUP BY 1,2

ORDER BY 1;| sales_year | kind_of_business | sales |

|---|---|---|

| 1992 | Book stores | 8327 |

| 1992 | Sporting goods stores | 15583 |

| 1992 | Hobby, toy, and game stores | 11251 |

| 1993 | Book stores | 9108 |

| 1993 | Sporting goods stores | 16791 |

| 1993 | Hobby, toy, and game stores | 11651 |

| 1994 | Book stores | 10107 |

| 1994 | Sporting goods stores | 18825 |

| 1994 | Hobby, toy, and game stores | 12850 |

| 1995 | Book stores | 11196 |

3.2.2 Comparing Components

retail_sales |>

filter(kind_of_business %in%

c('Book stores',

'Sporting goods stores',

'Hobby, toy, and game stores')) |>

mutate(sales_year = year(sales_month)) |>

group_by(sales_year, kind_of_business) |>

summarize(sales = sum(sales, na.rm = TRUE), .groups = "drop") |>

ggplot(aes(x = sales_year, y = sales, color = kind_of_business)) +

geom_line() +

theme(legend.position = "top")

SELECT sales_month, kind_of_business, sales

FROM retail_sales

WHERE kind_of_business IN ('Men''s clothing stores','Women''s clothing stores')

ORDER BY 1,2;| sales_month | kind_of_business | sales |

|---|---|---|

| 1992-01-01 | Men’s clothing stores | 701 |

| 1992-01-01 | Women’s clothing stores | 1873 |

| 1992-02-01 | Men’s clothing stores | 658 |

| 1992-02-01 | Women’s clothing stores | 1991 |

| 1992-03-01 | Men’s clothing stores | 731 |

| 1992-03-01 | Women’s clothing stores | 2403 |

| 1992-04-01 | Men’s clothing stores | 816 |

| 1992-04-01 | Women’s clothing stores | 2665 |

| 1992-05-01 | Men’s clothing stores | 856 |

| 1992-05-01 | Women’s clothing stores | 2752 |

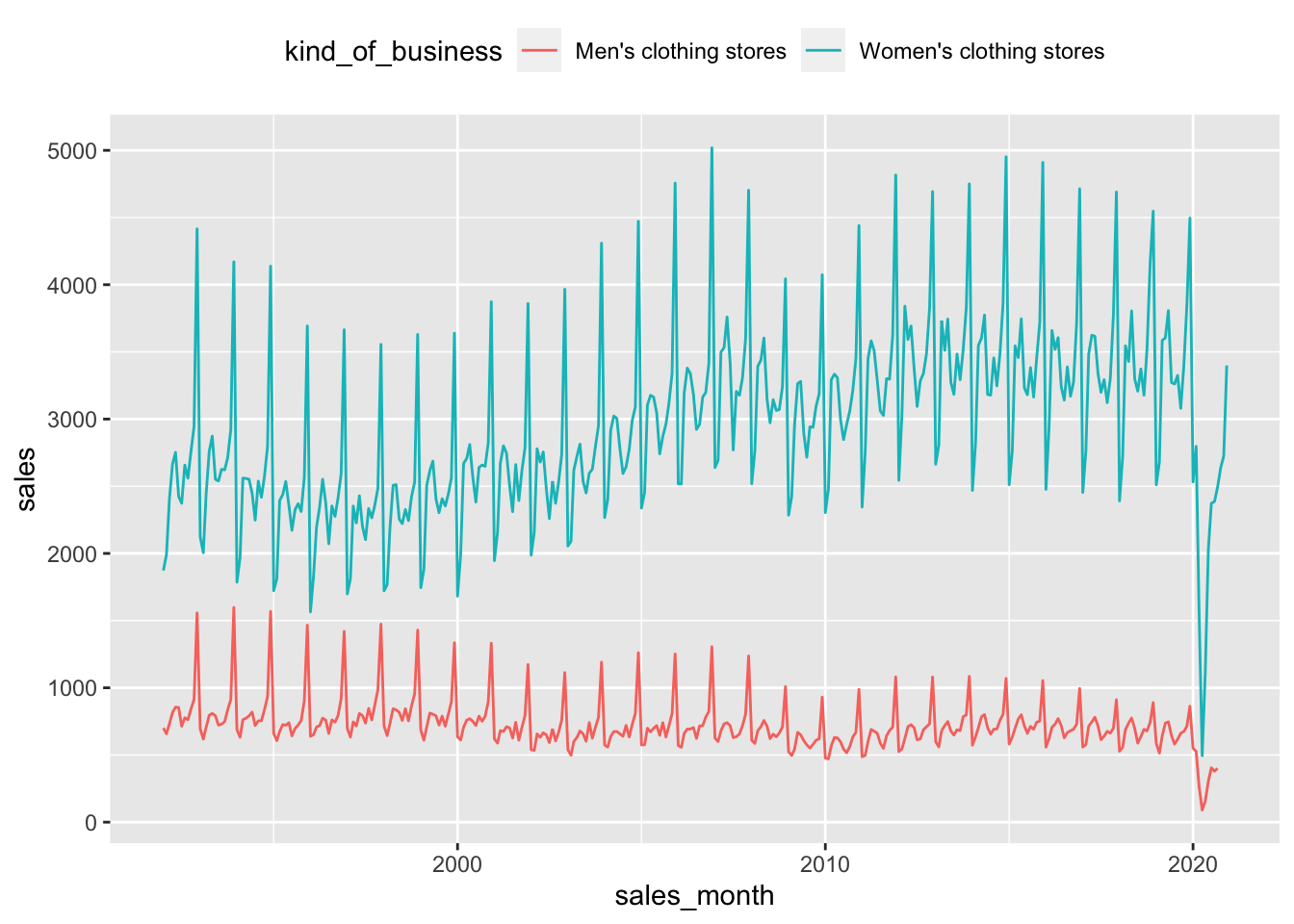

retail_sales |>

filter(kind_of_business %in% c("Men's clothing stores",

"Women's clothing stores")) |>

select(sales_month, kind_of_business, sales) |>

ggplot(aes(x = sales_month, y = sales, color = kind_of_business)) +

geom_line() +

theme(legend.position = "top")

SELECT date_part('year',sales_month) as sales_year,

kind_of_business, sum(sales) as sales

FROM retail_sales

WHERE kind_of_business IN

('Men''s clothing stores',

'Women''s clothing stores')

GROUP BY 1, 2

ORDER BY 1, 2;| sales_year | kind_of_business | sales |

|---|---|---|

| 1992 | Men’s clothing stores | 10179 |

| 1992 | Women’s clothing stores | 31815 |

| 1993 | Men’s clothing stores | 9962 |

| 1993 | Women’s clothing stores | 32350 |

| 1994 | Men’s clothing stores | 10032 |

| 1994 | Women’s clothing stores | 30585 |

| 1995 | Men’s clothing stores | 9315 |

| 1995 | Women’s clothing stores | 28696 |

| 1996 | Men’s clothing stores | 9546 |

| 1996 | Women’s clothing stores | 28238 |

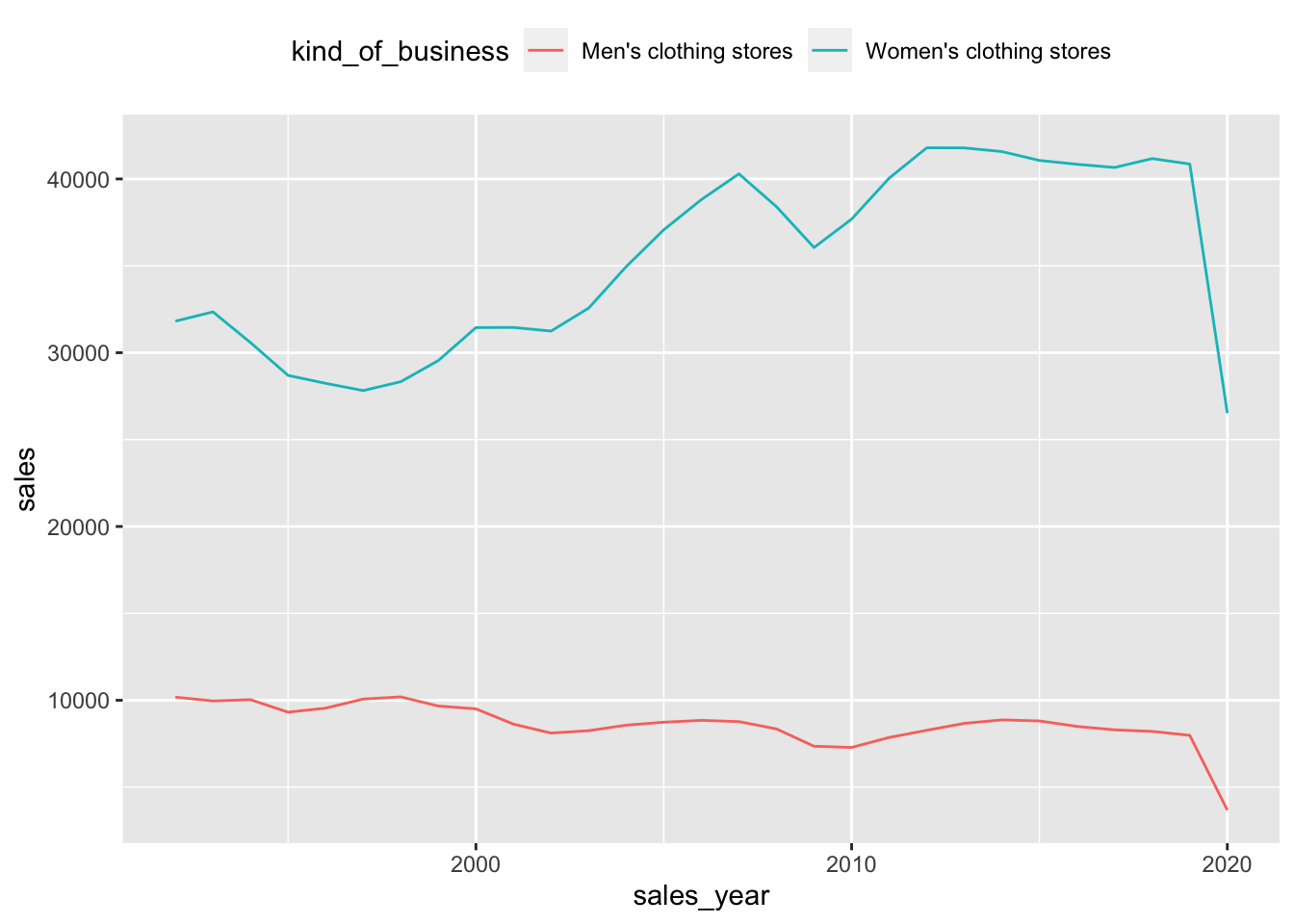

retail_sales |>

filter(kind_of_business %in%

c("Men's clothing stores",

"Women's clothing stores")) |>

mutate(sales_year = year(sales_month)) |>

group_by(sales_year, kind_of_business) |>

summarize(sales = sum(sales, na.rm = TRUE), .groups = "drop") |>

ggplot(aes(x = sales_year, y = sales, color = kind_of_business)) +

geom_line() +

theme(legend.position = "top")

SELECT date_part('year', sales_month) AS sales_year,

sum(CASE WHEN kind_of_business = 'Women''s clothing stores'

then sales

END) AS womens_sales,

sum(CASE WHEN kind_of_business = 'Men''s clothing stores'

then sales

END) AS mens_sales

FROM retail_sales

WHERE kind_of_business IN

('Men''s clothing stores',

'Women''s clothing stores')

GROUP BY 1

ORDER BY 1;| sales_year | womens_sales | mens_sales |

|---|---|---|

| 1992 | 31815 | 10179 |

| 1993 | 32350 | 9962 |

| 1994 | 30585 | 10032 |

| 1995 | 28696 | 9315 |

| 1996 | 28238 | 9546 |

| 1997 | 27822 | 10069 |

| 1998 | 28332 | 10196 |

| 1999 | 29549 | 9667 |

| 2000 | 31447 | 9507 |

| 2001 | 31453 | 8625 |

pivoted_sales <-

retail_sales |>

filter(kind_of_business %in%

c("Men's clothing stores",

"Women's clothing stores")) |>

mutate(kind_of_business = if_else(kind_of_business == "Women's clothing stores",

"womens", "mens"),

sales_year = year(sales_month)) |>

group_by(sales_year, kind_of_business) |>

summarize(sales = sum(sales, na.rm = TRUE), .groups = "drop") |>

pivot_wider(id_cols = "sales_year",

names_from = "kind_of_business",

names_glue = "{kind_of_business}_{.value}",

values_from = "sales")

pivoted_sales |>

show_query()<SQL>

SELECT

sales_year,

MAX(CASE WHEN (kind_of_business = 'mens') THEN sales END) AS mens_sales,

MAX(CASE WHEN (kind_of_business = 'womens') THEN sales END) AS womens_sales

FROM (

SELECT sales_year, kind_of_business, SUM(sales) AS sales

FROM (

SELECT

sales_month,

naics_code,

CASE WHEN (kind_of_business = 'Women''s clothing stores') THEN 'womens' WHEN NOT (kind_of_business = 'Women''s clothing stores') THEN 'mens' END AS kind_of_business,

reason_for_null,

sales,

EXTRACT(year FROM sales_month) AS sales_year

FROM retail_sales

WHERE (kind_of_business IN ('Men''s clothing stores', 'Women''s clothing stores'))

) q01

GROUP BY sales_year, kind_of_business

) q02

GROUP BY sales_yearpivoted_sales |>

arrange(sales_year) |>

collect(n = 10) |>

kable()| sales_year | mens_sales | womens_sales |

|---|---|---|

| 1992 | 10179 | 31815 |

| 1993 | 9962 | 32350 |

| 1994 | 10032 | 30585 |

| 1995 | 9315 | 28696 |

| 1996 | 9546 | 28238 |

| 1997 | 10069 | 27822 |

| 1998 | 10196 | 28332 |

| 1999 | 9667 | 29549 |

| 2000 | 9507 | 31447 |

| 2001 | 8625 | 31453 |

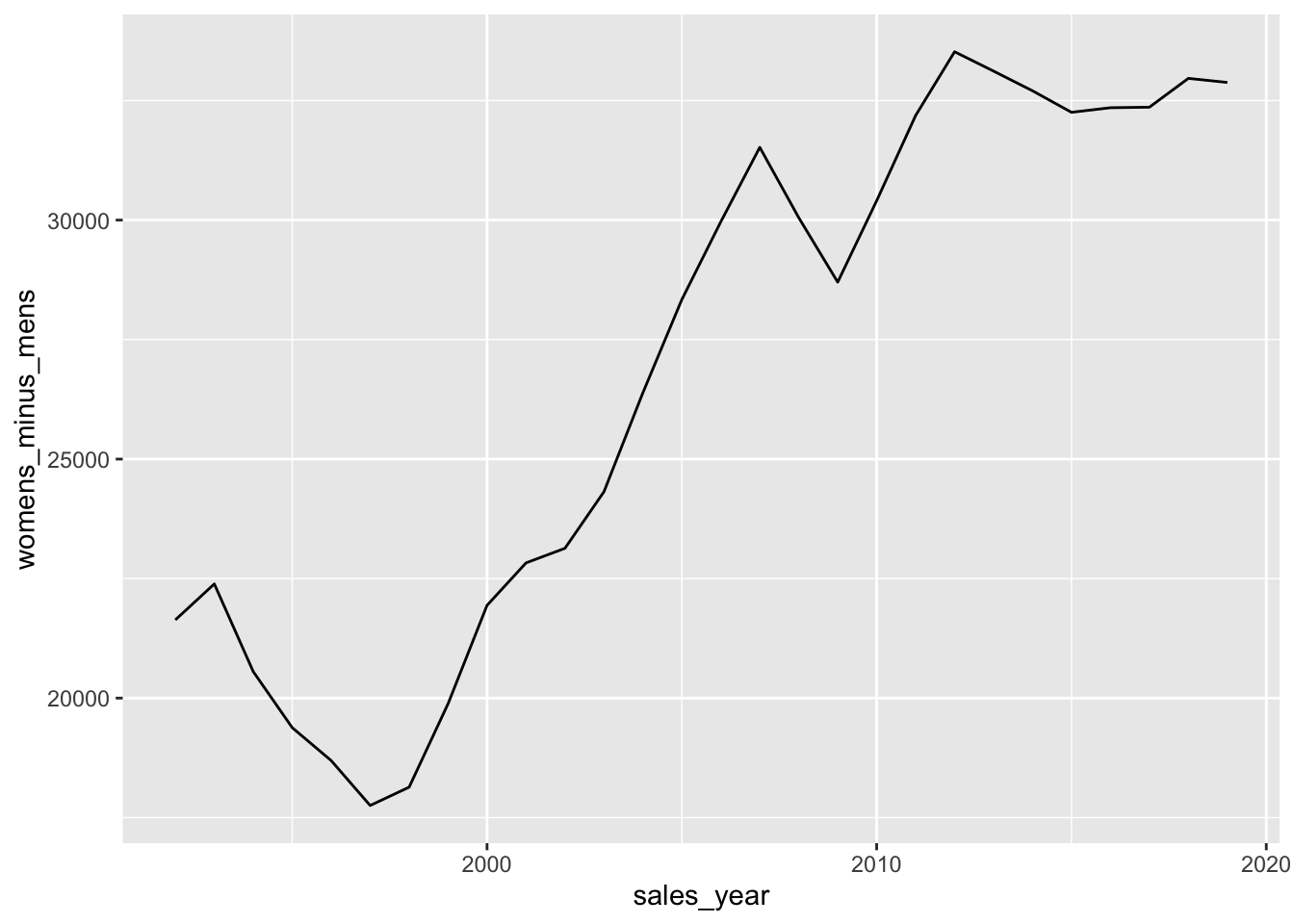

pivoted_sales |>

filter(sales_year <= 2019) |>

group_by(sales_year) |>

mutate(womens_minus_mens = womens_sales - mens_sales,

mens_minus_womens = mens_sales - womens_sales) |>

select(sales_year, womens_minus_mens, mens_minus_womens) |>

ggplot(aes(y = womens_minus_mens, x = sales_year)) +

geom_line()

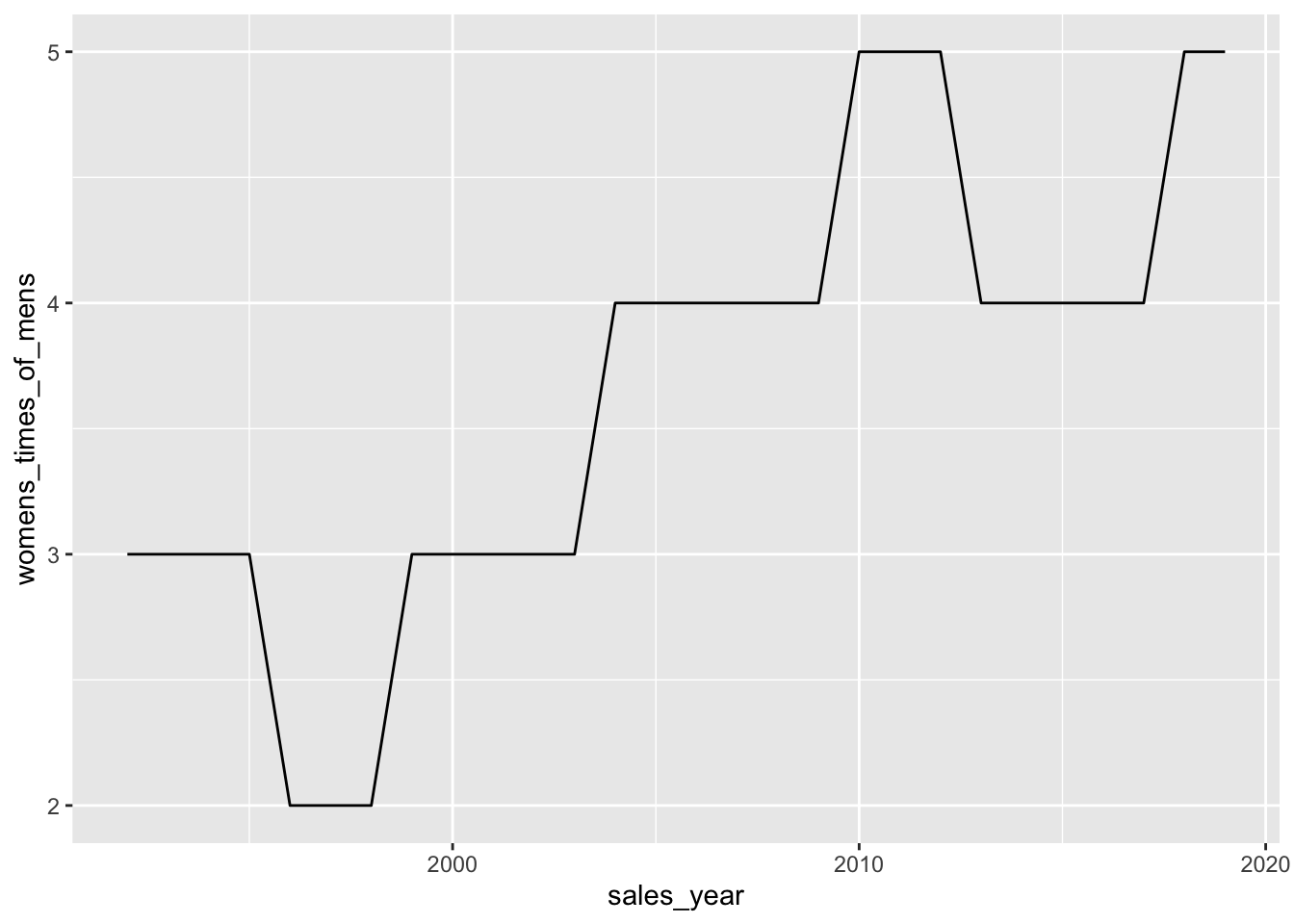

pivoted_sales |>

filter(sales_year <= 2019) |>

group_by(sales_year) |>

mutate(womens_times_of_mens = womens_sales / mens_sales) |>

ggplot(aes(y = womens_times_of_mens, x = sales_year)) +

geom_line()

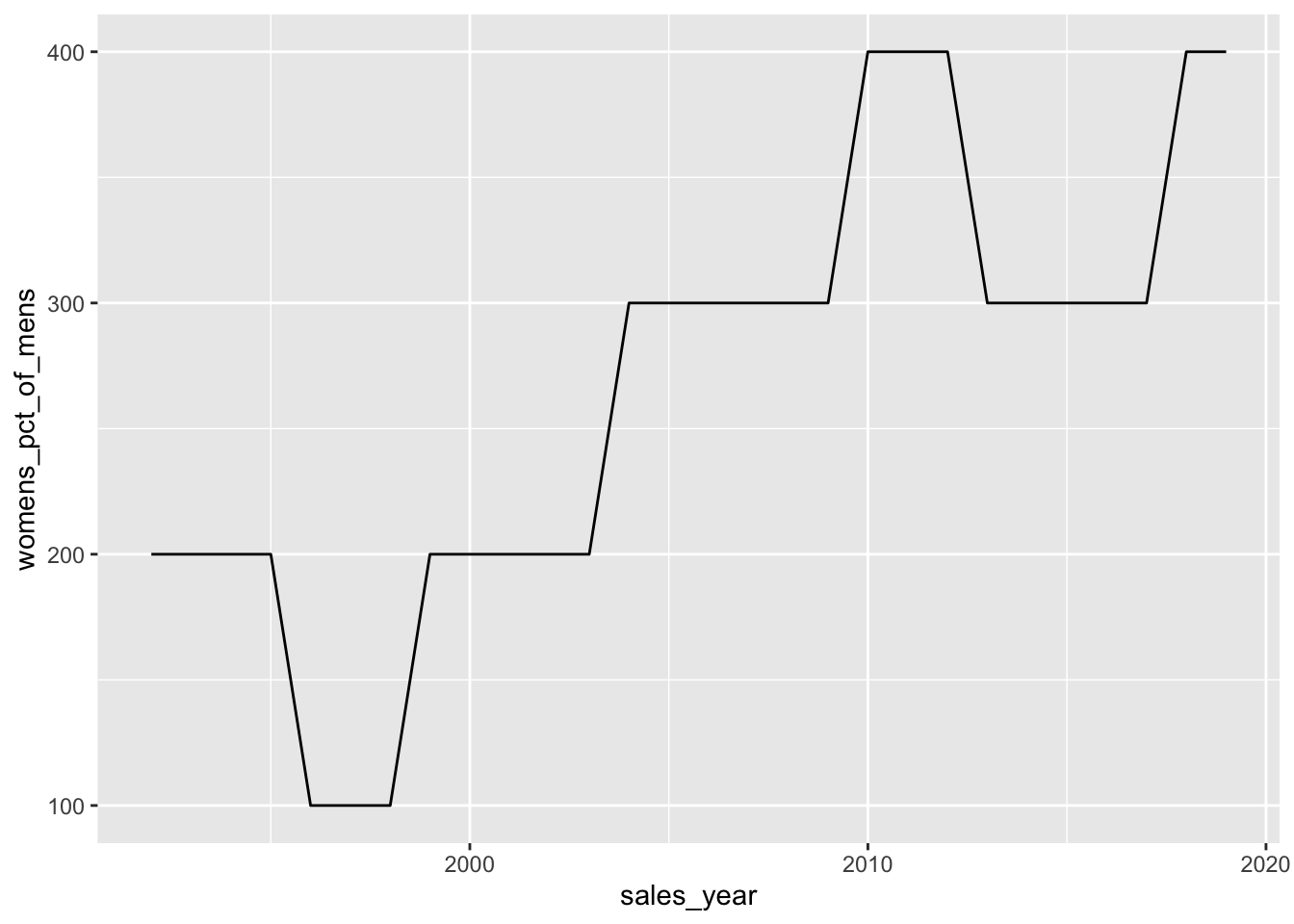

pivoted_sales |>

filter(sales_year <= 2019) |>

group_by(sales_year) |>

mutate(womens_pct_of_mens = (womens_sales / mens_sales - 1) * 100) |>

ggplot(aes(y = womens_pct_of_mens, x = sales_year)) +

geom_line()

3.2.3 Percent of Total Calculations

retail_sales |>

filter(kind_of_business %in%

c("Men's clothing stores",

"Women's clothing stores")) |>

group_by(sales_month) |>

mutate(total_sales = sum(sales, na.rm = TRUE)) |>

ungroup() |>

mutate(pct_total_sales = sales * 100 / total_sales) |>

select(sales_month, kind_of_business, pct_total_sales) |>

collect(n = 3) |>

kable()| sales_month | kind_of_business | pct_total_sales |

|---|---|---|

| 1992-06-01 | Men’s clothing stores | 26.02991 |

| 1992-06-01 | Women’s clothing stores | 73.97009 |

| 1992-08-01 | Men’s clothing stores | 22.62667 |

retail_sales |>

filter(kind_of_business %in%

c("Men's clothing stores",

"Women's clothing stores")) |>

group_by(sales_month) |>

mutate(total_sales = sum(sales, na.rm = TRUE)) |>

ungroup() |>

mutate(pct_total_sales = sales * 100 / total_sales) |>

show_query()<SQL>

SELECT *, (sales * 100.0) / total_sales AS pct_total_sales

FROM (

SELECT *, SUM(sales) OVER (PARTITION BY sales_month) AS total_sales

FROM retail_sales

WHERE (kind_of_business IN ('Men''s clothing stores', 'Women''s clothing stores'))

) q01retail_sales |>

filter(kind_of_business %in%

c("Men's clothing stores",

"Women's clothing stores")) |>

group_by(sales_month) |>

mutate(total_sales = sum(sales, na.rm = TRUE)) |>

ungroup() |>

mutate(pct_total_sales = sales * 100 / total_sales) |>

ggplot(aes(y = pct_total_sales, x = sales_month, color = kind_of_business)) +

geom_line()

3.2.4 Indexing to See Percent Change over Time

retail_sales |>

filter(kind_of_business == "Women's clothing stores") |>

mutate(sales_year = year(sales_month)) |>

group_by(sales_year) |>

summarize(sales = sum(sales, na.rm = TRUE)) |>

ungroup() |>

window_order(sales_year) |>

mutate(index_sales = first(sales),

pct_from_index = (sales/index_sales - 1) * 100) |>

collect(n = 3) |>

kable()| sales_year | sales | index_sales | pct_from_index |

|---|---|---|---|

| 1992 | 31815 | 31815 | 0 |

| 1993 | 32350 | 31815 | 0 |

| 1994 | 30585 | 31815 | -100 |

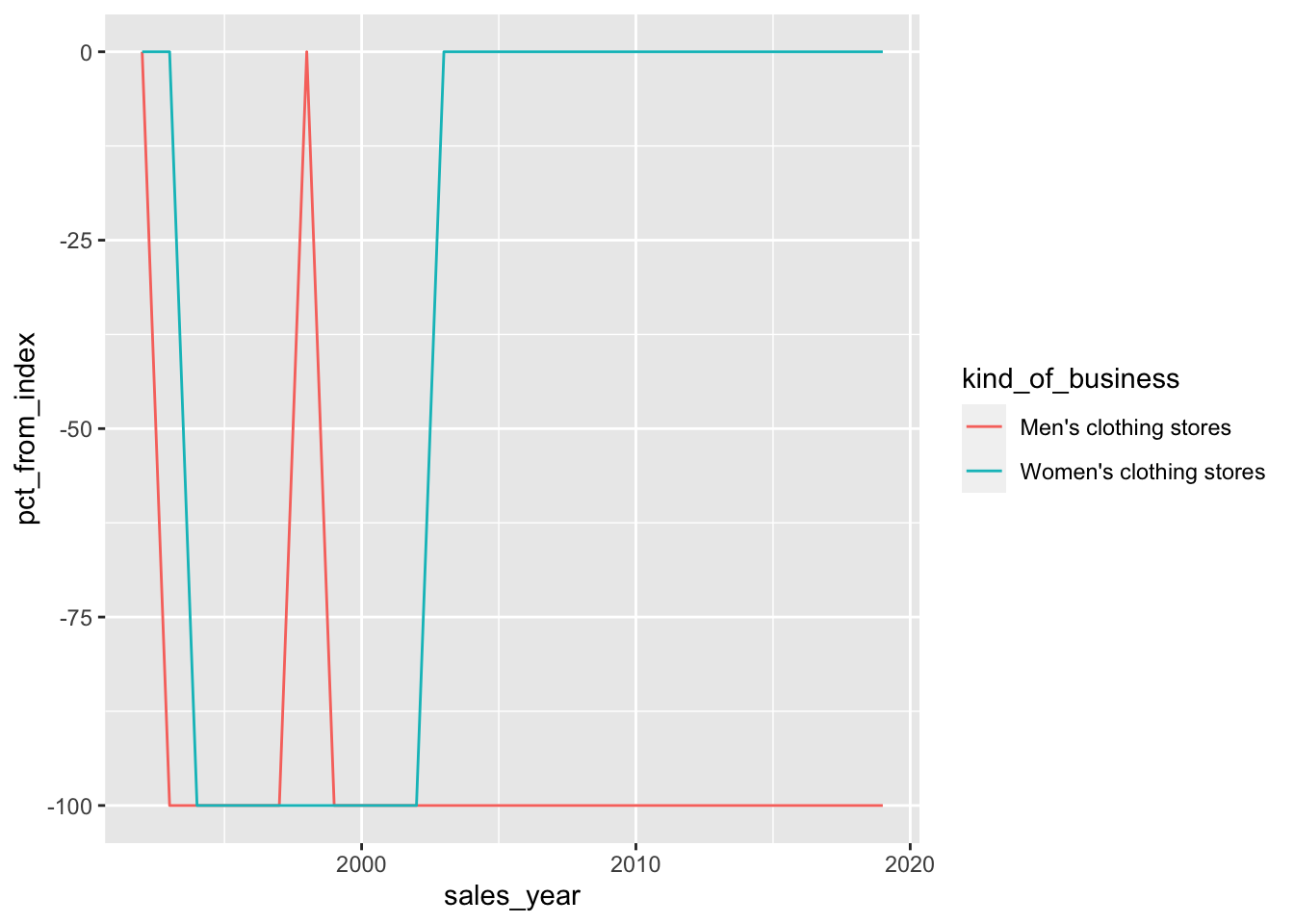

retail_sales |>

filter(kind_of_business %in% c("Women's clothing stores",

"Men's clothing stores"),

sales_month <= '2019-12-31') |>

mutate(sales_year = year(sales_month)) |>

group_by(kind_of_business, sales_year) |>

summarize(sales = sum(sales, na.rm = TRUE), .groups = "drop") |>

group_by(kind_of_business) |>

window_order(sales_year) |>

mutate(index_sales = first(sales),

pct_from_index = (sales/index_sales - 1) * 100) |>

ungroup() |>

ggplot(aes(y = pct_from_index, x = sales_year, color = kind_of_business)) +

geom_line()

3.3 Rolling Time Windows

3.3.1 Calculating Rolling Time Windows

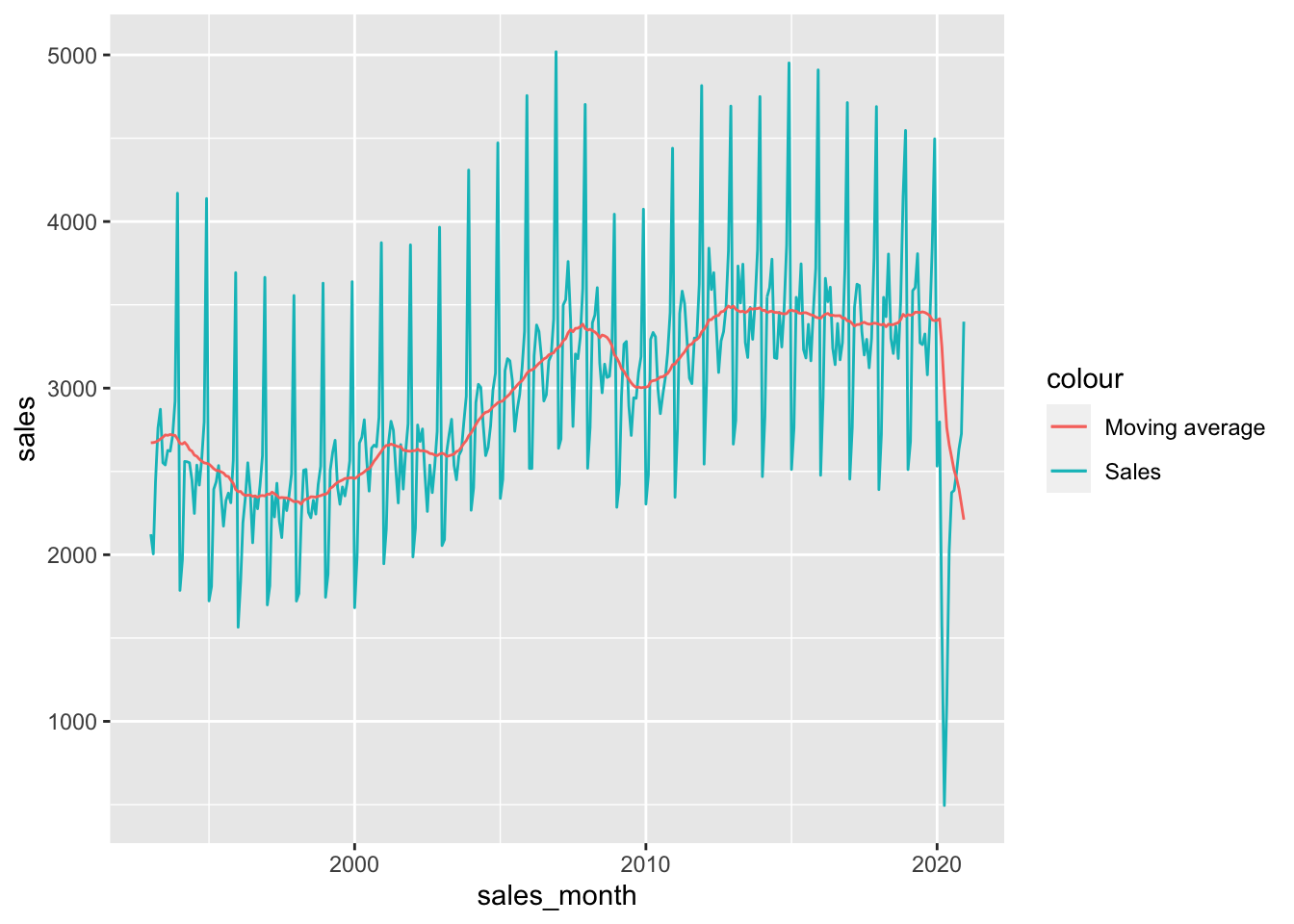

mvg_avg <-

retail_sales |>

filter(kind_of_business == "Women's clothing stores") |>

window_order(sales_month) |>

window_frame(-11, 0) |>

mutate(moving_avg = mean(sales, na.rm = TRUE),

records_count = n()) |>

filter(sales_month >= '1993-01-01')

mvg_avg |>

select(sales_month, moving_avg, records_count) |>

collect(n = 3) |>

kable(digits = 2)| sales_month | moving_avg | records_count |

|---|---|---|

| 1993-01-01 | 2672.08 | 12 |

| 1993-02-01 | 2673.25 | 12 |

| 1993-03-01 | 2676.50 | 12 |

mvg_avg |>

ggplot(aes(x = sales_month)) +

geom_line(aes(y = sales, colour = "Sales")) +

geom_line(aes(y = moving_avg, colour = "Moving average"))

SELECT

sales_month,

avg(sales) over w AS moving_avg,

count(sales) over w AS records_count

FROM retail_sales

WHERE kind_of_business = 'Women''s clothing stores'

WINDOW w AS (order by sales_month

rows between 11 preceding and current row)| sales_month | moving_avg | records_count |

|---|---|---|

| 1992-01-01 | 1873.000 | 1 |

| 1992-02-01 | 1932.000 | 2 |

| 1992-03-01 | 2089.000 | 3 |

| 1992-04-01 | 2233.000 | 4 |

| 1992-05-01 | 2336.800 | 5 |

| 1992-06-01 | 2351.333 | 6 |

| 1992-07-01 | 2354.429 | 7 |

| 1992-08-01 | 2392.250 | 8 |

| 1992-09-01 | 2410.889 | 9 |

| 1992-10-01 | 2445.300 | 10 |

retail_sales |>

filter(kind_of_business == "Women's clothing stores") |>

window_order(sales_month) |>

window_frame(-11, 0) |>

mutate(moving_avg = mean(sales, na.rm = TRUE),

records_count = n()) |>

select(sales_month, moving_avg, records_count) |>

collect(n = 10) |>

kable()date_dim <-

tibble(date = seq(as.Date('1993-01-01'),

as.Date('2020-12-01'),

by = "1 month")) |>

copy_to(db, df = _, overwrite = TRUE, name = "date_dim")WITH jan_jul AS (

SELECT sales_month, sales

FROM retail_sales

WHERE kind_of_business = 'Women''s clothing stores'

AND date_part('month', sales_month) IN (1, 7))

SELECT a.date, b.sales_month, b.sales

FROM date_dim a

INNER JOIN jan_jul b

ON b.sales_month BETWEEN a.date - interval '11 months' AND a.date

WHERE a.date BETWEEN '1993-01-01' AND '2020-12-01';| date | sales_month | sales |

|---|---|---|

| 1993-01-01 | 1992-07-01 | 2373 |

| 1993-02-01 | 1992-07-01 | 2373 |

| 1993-03-01 | 1992-07-01 | 2373 |

| 1993-04-01 | 1992-07-01 | 2373 |

| 1993-05-01 | 1992-07-01 | 2373 |

| 1993-06-01 | 1992-07-01 | 2373 |

| 1993-01-01 | 1993-01-01 | 2123 |

| 1993-02-01 | 1993-01-01 | 2123 |

| 1993-03-01 | 1993-01-01 | 2123 |

| 1993-04-01 | 1993-01-01 | 2123 |

jan_jul <-

retail_sales |>

filter(kind_of_business == "Women's clothing stores",

month(sales_month) %in% c(1, 7)) |>

select(sales_month, sales)

date_dim |>

mutate(date_start = date - months(11)) |>

inner_join(jan_jul,

join_by(between(y$sales_month, x$date_start, x$date))) |>

select(date, sales_month, sales) |>

collect(n = 3) |>

kable()| date | sales_month | sales |

|---|---|---|

| 1993-01-01 | 1992-07-01 | 2373 |

| 1993-02-01 | 1992-07-01 | 2373 |

| 1993-03-01 | 1992-07-01 | 2373 |

date_dim |>

mutate(date_start = date - months(11)) |>

inner_join(jan_jul,

join_by(between(y$sales_month, x$date_start, x$date))) |>

group_by(date) |>

summarize(moving_avg = mean(sales, na.rm = TRUE),

records = n()) |>

collect(n = 3) |>

kable()| date | moving_avg | records |

|---|---|---|

| 1993-01-01 | 2248 | 2 |

| 1993-02-01 | 2248 | 2 |

| 1993-03-01 | 2248 | 2 |

WITH sales_months AS (

SELECT distinct sales_month

FROM retail_sales

WHERE sales_month between '1993-01-01' and '2020-12-01')

SELECT a.sales_month, avg(b.sales) as moving_avg

FROM sales_months a

JOIN retail_sales b

on b.sales_month between

a.sales_month - interval '11 months' and a.sales_month

and b.kind_of_business = 'Women''s clothing stores'

GROUP BY 1

ORDER BY 1

LIMIT 3;| sales_month | moving_avg |

|---|---|

| 1993-01-01 | 2672.083 |

| 1993-02-01 | 2673.250 |

| 1993-03-01 | 2676.500 |

sales_months <-

retail_sales |>

filter(between(sales_month,

as.Date('1993-01-01'),

as.Date('2020-12-01'))) |>

distinct(sales_month)

sales_months |>

mutate(month_start = sales_month - months(11)) |>

inner_join(retail_sales,

join_by(between(y$sales_month, x$month_start, x$sales_month)),

suffix = c("", "_y")) |>

filter(kind_of_business == "Women's clothing stores") |>

group_by(sales_month) |>

summarize(moving_avg = mean(sales, na.rm = TRUE)) |>

arrange(sales_month) |>

collect(n = 3) |>

kable()| sales_month | moving_avg |

|---|---|

| 1993-01-01 | 2672.083 |

| 1993-02-01 | 2673.250 |

| 1993-03-01 | 2676.500 |

3.3.2 Calculating Cumulative Values

SELECT sales_month, sales,

sum(sales) OVER w AS sales_ytd

FROM retail_sales

WHERE kind_of_business = 'Women''s clothing stores'

WINDOW w AS (PARTITION BY date_part('year', sales_month)

ORDER BY sales_month)

LIMIT 3;| sales_month | sales | sales_ytd |

|---|---|---|

| 2017-01-01 | 2454 | 2454 |

| 2017-02-01 | 2763 | 5217 |

| 2017-03-01 | 3485 | 8702 |

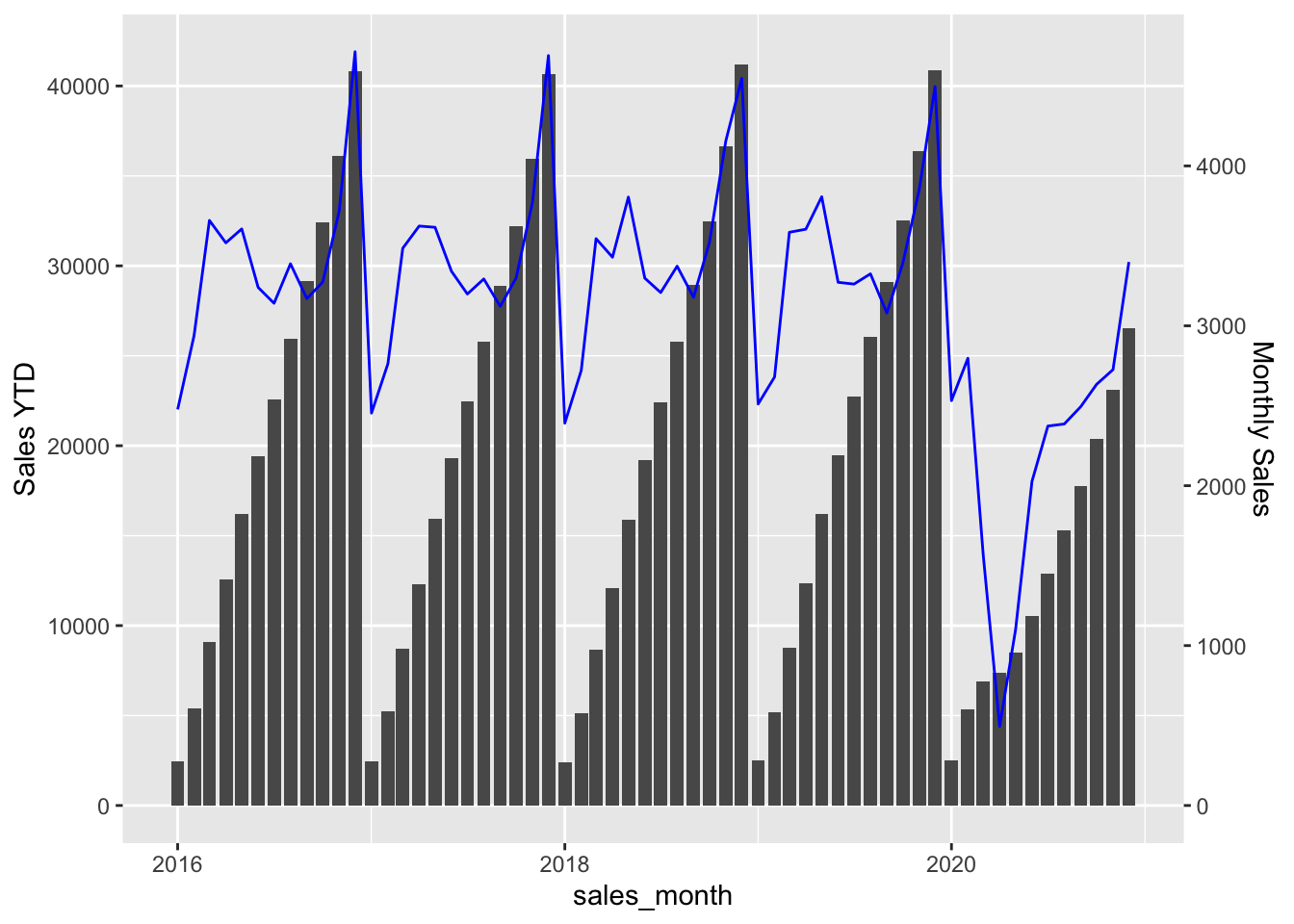

ytd_sales <-

retail_sales |>

filter(kind_of_business == "Women's clothing stores") |>

mutate(year = year(sales_month)) |>

group_by(year) |>

window_order(sales_month) |>

mutate(sales_ytd = cumsum(sales)) |>

ungroup() |>

select(sales_month, sales, sales_ytd)

ytd_sales |>

filter(month(sales_month) %in% c(1:3, 12)) |>

collect(n = 6) |>

kable()| sales_month | sales | sales_ytd |

|---|---|---|

| 2017-01-01 | 2454 | 2454 |

| 2019-01-01 | 2511 | 2511 |

| 2017-02-01 | 2763 | 5217 |

| 2019-02-01 | 2680 | 5191 |

| 2017-03-01 | 3485 | 8702 |

| 2019-03-01 | 3585 | 8776 |

factor <- 40/4.5

ytd_sales |>

filter(year(sales_month) %in% 2016:2020) |>

ggplot(aes(x = sales_month, y = sales_ytd)) +

geom_bar(stat = "identity") +

geom_line(aes(y = sales * factor, colour = I("blue"))) +

scale_y_continuous(

"Sales YTD",

sec.axis = sec_axis(~ . / factor, name = "Monthly Sales")

)

SELECT a.sales_month, a.sales,

sum(b.sales) AS sales_ytd

FROM retail_sales a

INNER JOIN retail_sales b ON

date_part('year',a.sales_month) = date_part('year',b.sales_month)

AND b.sales_month <= a.sales_month

AND b.kind_of_business = 'Women''s clothing stores'

WHERE a.kind_of_business = 'Women''s clothing stores'

GROUP BY 1,2;| sales_month | sales | sales_ytd |

|---|---|---|

| 1992-12-01 | 4416 | 31815 |

| 1993-12-01 | 4170 | 32350 |

| 1992-11-01 | 2946 | 27399 |

| 1993-11-01 | 2923 | 28180 |

| 1992-10-01 | 2755 | 24453 |

| 1993-10-01 | 2713 | 25257 |

| 1992-09-01 | 2560 | 21698 |

| 1993-09-01 | 2622 | 22544 |

| 1992-08-01 | 2657 | 19138 |

| 1993-08-01 | 2626 | 19922 |

retail_sales_yr <-

retail_sales |>

mutate(year = year(sales_month))

retail_sales_yr |>

filter(kind_of_business == "Women's clothing stores") |>

inner_join(retail_sales_yr,

join_by(year, kind_of_business,

sales_month >= sales_month),

suffix = c("", "_y")) |>

group_by(sales_month, sales) |>

summarize(sales_ytd = sum(sales_y, na.rm = TRUE),

.groups = "drop") |>

filter(month(sales_month) %in% c(1:3, 12)) |>

collect(n = 6) |>

kable()| sales_month | sales | sales_ytd |

|---|---|---|

| 1992-02-01 | 1991 | 3864 |

| 1993-02-01 | 2005 | 4128 |

| 1994-02-01 | 1970 | 3756 |

| 1992-03-01 | 2403 | 6267 |

| 1993-03-01 | 2442 | 6570 |

| 1994-03-01 | 2560 | 6316 |

3.4 Analyzing with Seasonality

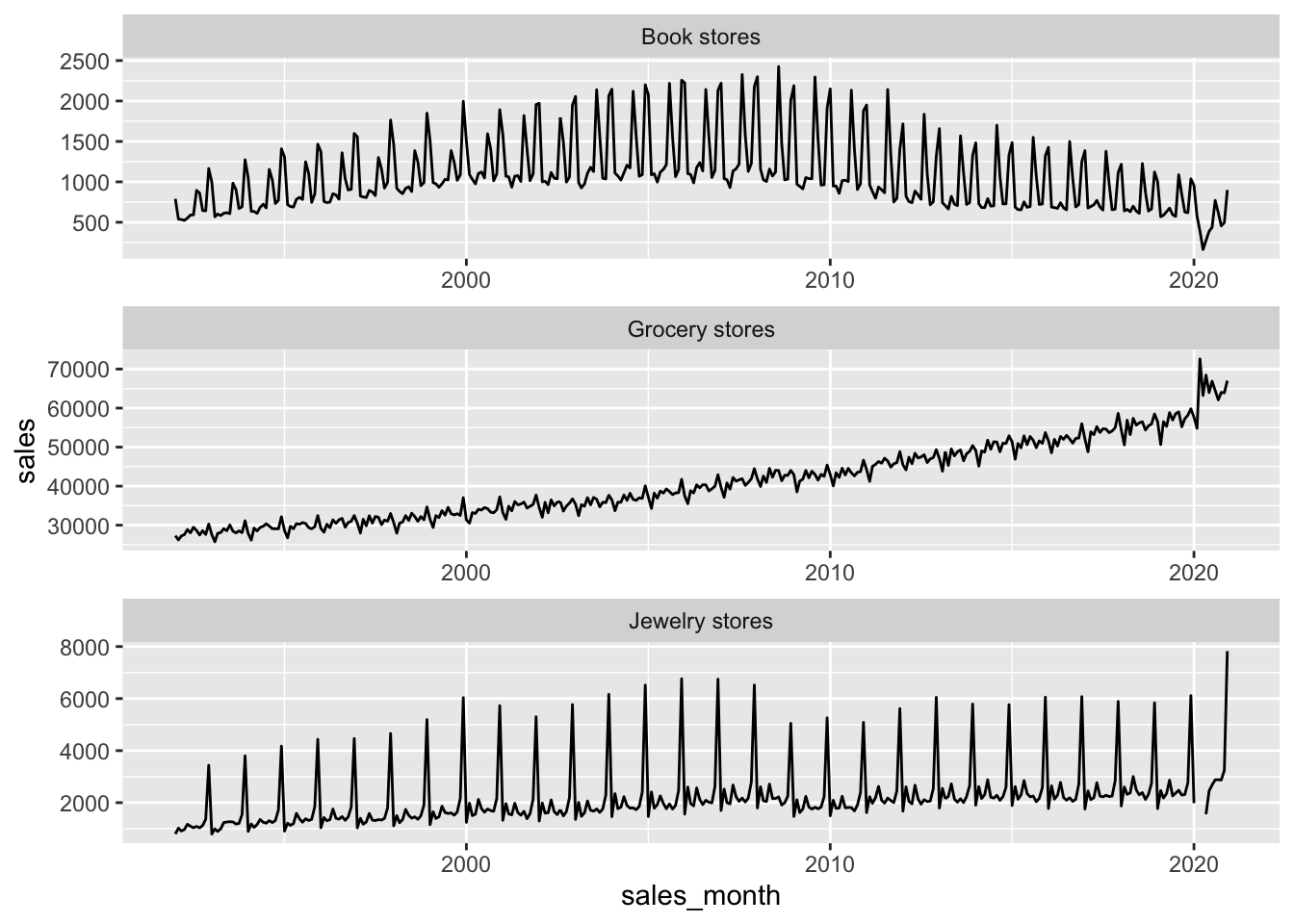

retail_sales |>

filter(kind_of_business %in% c("Jewelry stores",

"Book stores",

"Grocery stores")) |>

ggplot(aes(x = sales_month, y = sales)) +

geom_line() +

facet_wrap(vars(kind_of_business), nrow = 3, scales = "free")

3.4.1 Period-over-Period Comparisons: YoY and MoM

SELECT kind_of_business, sales_month, sales,

lag(sales_month) OVER w AS prev_month,

lag(sales) OVER w AS prev_month_sales

FROM retail_sales

WHERE kind_of_business = 'Book stores'

WINDOW w AS (PARTITION BY kind_of_business ORDER BY sales_month)| kind_of_business | sales_month | sales | prev_month | prev_month_sales |

|---|---|---|---|---|

| Book stores | 1992-01-01 | 790 | NA | NA |

| Book stores | 1992-02-01 | 539 | 1992-01-01 | 790 |

| Book stores | 1992-03-01 | 535 | 1992-02-01 | 539 |

| Book stores | 1992-04-01 | 523 | 1992-03-01 | 535 |

| Book stores | 1992-05-01 | 552 | 1992-04-01 | 523 |

| Book stores | 1992-06-01 | 589 | 1992-05-01 | 552 |

| Book stores | 1992-07-01 | 592 | 1992-06-01 | 589 |

| Book stores | 1992-08-01 | 894 | 1992-07-01 | 592 |

| Book stores | 1992-09-01 | 861 | 1992-08-01 | 894 |

| Book stores | 1992-10-01 | 645 | 1992-09-01 | 861 |

books_w_lag <-

retail_sales |>

filter(kind_of_business == 'Book stores') |>

group_by(kind_of_business) |>

window_order(sales_month) |>

mutate(prev_month = lag(sales_month),

prev_month_sales = lag(sales)) |>

select(kind_of_business,

sales_month, sales,

prev_month, prev_month_sales)

books_w_lag |>

collect(n = 3) |>

kable()| kind_of_business | sales_month | sales | prev_month | prev_month_sales |

|---|---|---|---|---|

| Book stores | 1992-01-01 | 790 | NA | NA |

| Book stores | 1992-02-01 | 539 | 1992-01-01 | 790 |

| Book stores | 1992-03-01 | 535 | 1992-02-01 | 539 |

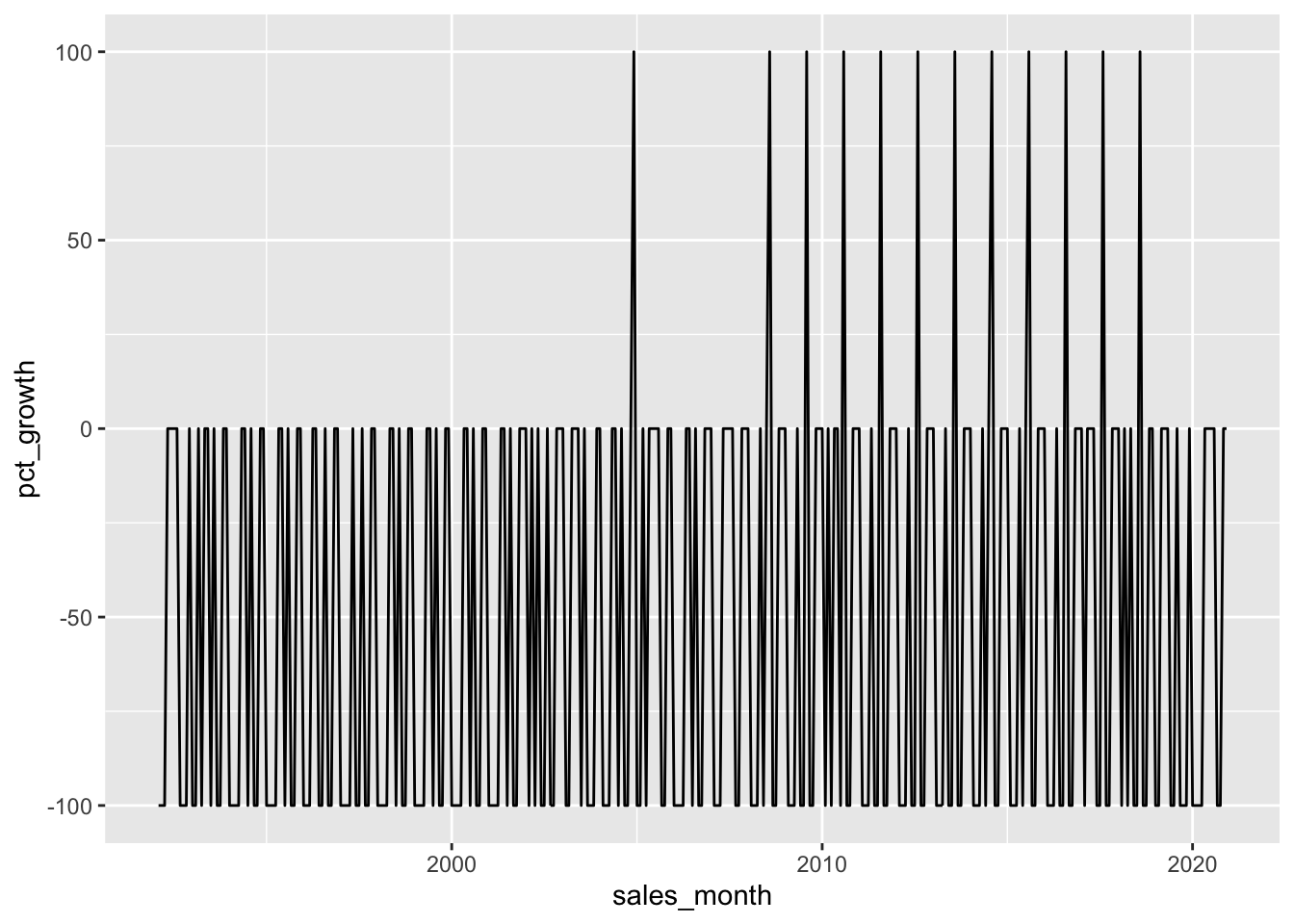

books_monthly <-

books_w_lag |>

mutate(pct_growth = (sales / prev_month_sales - 1) * 100) |>

select(-prev_month, -prev_month_sales)books_yearly <-

retail_sales |>

filter(kind_of_business == 'Book stores') |>

mutate(sales_year = year(sales_month)) |>

group_by(sales_year) |>

summarize(yearly_sales = sum(sales, na.rm = TRUE),

.groups = "drop") |>

window_order(sales_year) |>

mutate(prev_year_sales = lag(yearly_sales),

pct_growth = (yearly_sales/prev_year_sales - 1) * 100)

books_yearly |>

collect(n = 3) |>

kable(digits = 2)| sales_year | yearly_sales | prev_year_sales | pct_growth |

|---|---|---|---|

| 1992 | 8327 | NA | NA |

| 1993 | 9108 | 8327 | 0 |

| 1994 | 10107 | 9108 | 0 |

books_monthly |>

filter(!is.na(pct_growth)) |>

ggplot(aes(x = sales_month, y = pct_growth)) +

geom_line()

3.4.2 Period-over-Period Comparisons: Same Month Versus Last Year

books_lagged_year_month <-

retail_sales |>

filter(kind_of_business == 'Book stores') |>

mutate(month = month(sales_month)) |>

group_by(month) |>

window_order(sales_month) |>

mutate(prev_year_month = lag(sales_month),

prev_year_sales = lag(sales)) |>

ungroup() |>

select(sales_month, sales, prev_year_month, prev_year_sales)

books_lagged_year_month |>

filter(month(sales_month) <= 2,

year(sales_month) <= 1994) |>

arrange(sales_month) |>

collect(n = 6) |>

kable()| sales_month | sales | prev_year_month | prev_year_sales |

|---|---|---|---|

| 1992-01-01 | 790 | NA | NA |

| 1992-02-01 | 539 | NA | NA |

| 1993-01-01 | 998 | 1992-01-01 | 790 |

| 1993-02-01 | 568 | 1992-02-01 | 539 |

| 1994-01-01 | 1053 | 1993-01-01 | 998 |

| 1994-02-01 | 635 | 1993-02-01 | 568 |

books_lagged_year_month |>

mutate(dollar_diff = sales - prev_year_sales,

pct_diff = dollar_diff/prev_year_sales * 100) |>

select(-prev_year_month, -prev_year_sales) |>

filter(month(sales_month) == 1) |>

collect(n = 3) |>

kable(digits = 2)| sales_month | sales | dollar_diff | pct_diff |

|---|---|---|---|

| 1992-01-01 | 790 | NA | NA |

| 1993-01-01 | 998 | 208 | 0 |

| 1994-01-01 | 1053 | 55 | 0 |

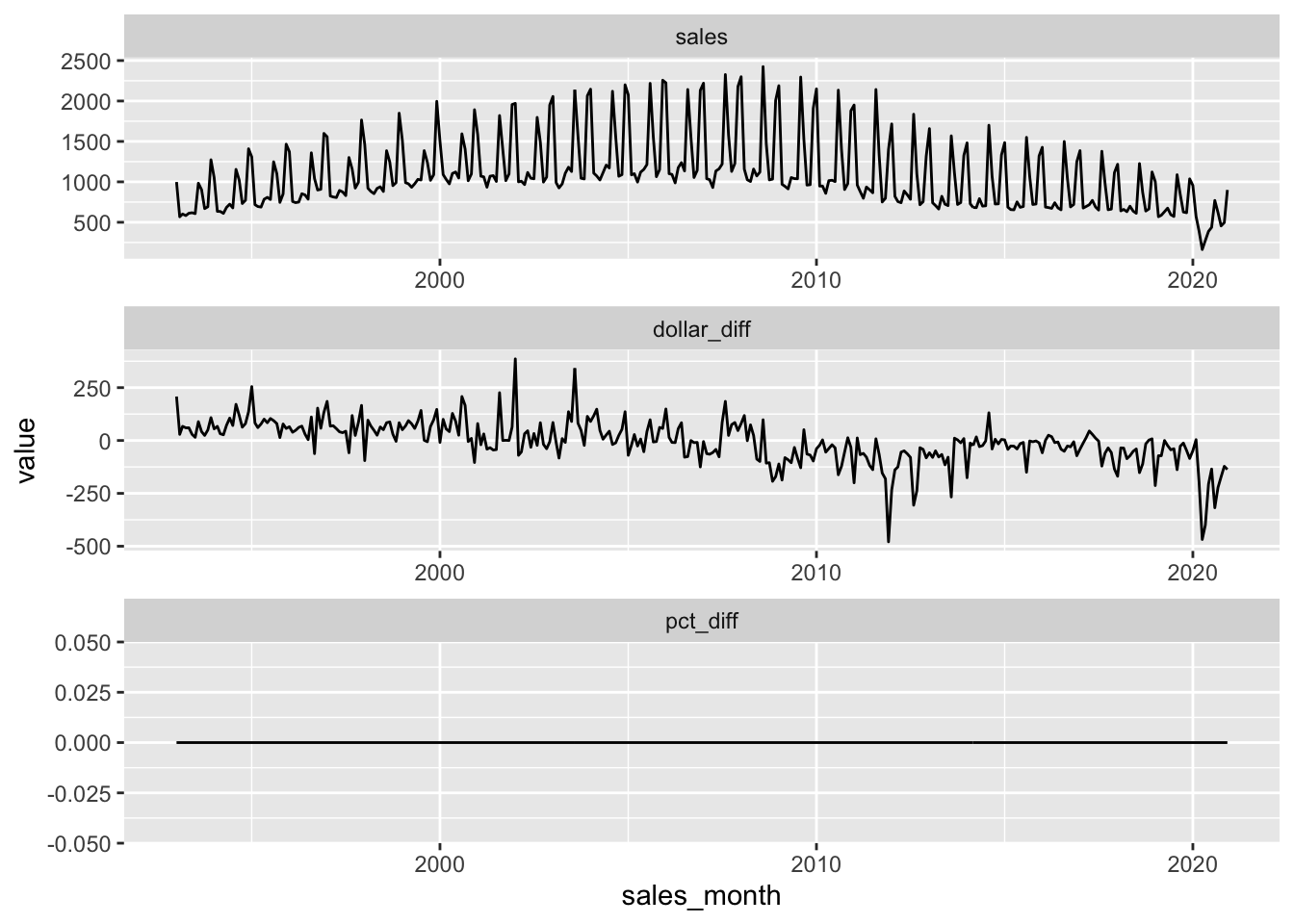

books_lagged_year_month |>

filter(!is.na(prev_year_sales)) |>

mutate(dollar_diff = sales - prev_year_sales,

pct_diff = dollar_diff/prev_year_sales * 100) |>

select(sales_month, sales, dollar_diff, pct_diff) |>

pivot_longer(-sales_month) |>

collect() |>

mutate(name = fct_inorder(name)) |>

ggplot(aes(x = sales_month, y = value)) +

geom_line() +

facet_wrap(. ~ name, nrow = 3, scales = "free")

With PostgreSQL, we would use to_char(sales_month,'Month'); with DuckDB, the equivalent is monthname(sales_month). To use an approach that works with either backend, we draw on arguments to the lubridate function month().

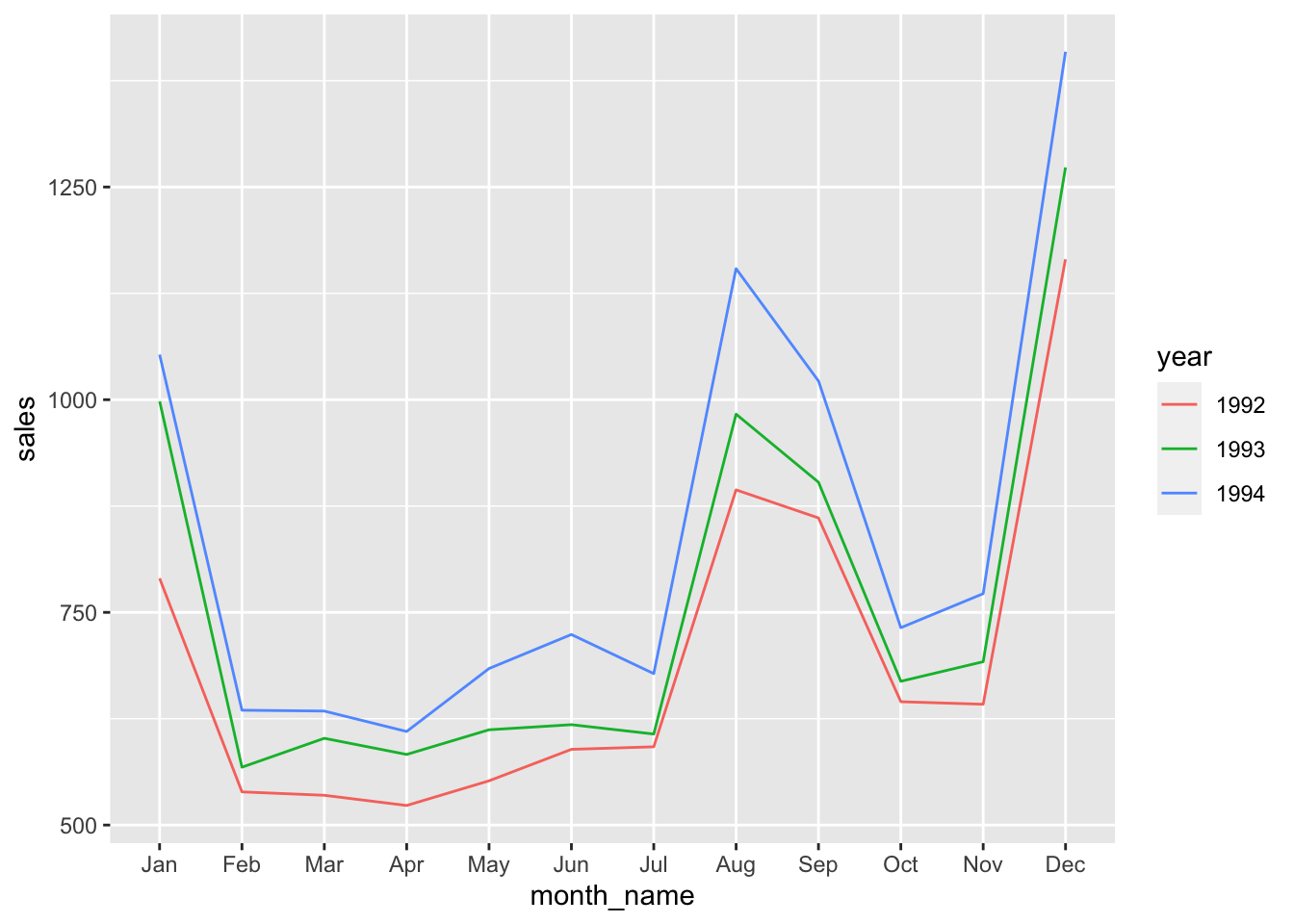

sales_92_94 <-

retail_sales |>

filter(kind_of_business == 'Book stores',

year(sales_month) %in% 1992:1994) |>

select(sales_month, sales) |>

mutate(month_number = month(sales_month),

month_name = month(sales_month,

label = TRUE, abbr = FALSE),

year = as.integer(year(sales_month)))

sales_92_94 |>

pivot_wider(id_cols = c(month_number, month_name),

names_from = year,

names_prefix = "sales_",

values_from = sales) |>

kable()| month_number | month_name | sales_1992 | sales_1993 | sales_1994 |

|---|---|---|---|---|

| 1 | January | 790 | 998 | 1053 |

| 2 | February | 539 | 568 | 635 |

| 3 | March | 535 | 602 | 634 |

| 4 | April | 523 | 583 | 610 |

| 5 | May | 552 | 612 | 684 |

| 6 | June | 589 | 618 | 724 |

| 7 | July | 592 | 607 | 678 |

| 8 | August | 894 | 983 | 1154 |

| 9 | September | 861 | 903 | 1022 |

| 10 | October | 645 | 669 | 732 |

| 11 | November | 642 | 692 | 772 |

| 12 | December | 1165 | 1273 | 1409 |

sales_92_94 |>

collect() |>

mutate(year = factor(year),

month_name = month(month_number, label = TRUE)) |>

ggplot(aes(x = month_name, y = sales,

group = year, colour = year)) +

geom_line()

3.4.3 Comparing to Multiple Prior Periods

prev_three <-

retail_sales |>

filter(kind_of_business == 'Book stores') |>

mutate(month = month(sales_month)) |>

group_by(month) |>

window_order(sales_month) |>

mutate(prev_sales_1 = lag(sales, 1),

prev_sales_2 = lag(sales, 2),

prev_sales_3 = lag(sales, 3)) |>

ungroup()

prev_three |>

filter(month == 1) |>

select(sales_month, sales, starts_with("prev_sales")) |>

collect(n = 5) |>

kable()| sales_month | sales | prev_sales_1 | prev_sales_2 | prev_sales_3 |

|---|---|---|---|---|

| 1992-01-01 | 790 | NA | NA | NA |

| 1993-01-01 | 998 | 790 | NA | NA |

| 1994-01-01 | 1053 | 998 | 790 | NA |

| 1995-01-01 | 1308 | 1053 | 998 | 790 |

| 1996-01-01 | 1373 | 1308 | 1053 | 998 |

prev_three |>

mutate(avg_prev_three = (prev_sales_1 +

prev_sales_2 +

prev_sales_3)/3,

pct_of_prev_3 = 100 * sales/avg_prev_three) |>

select(sales_month, sales, pct_of_prev_3) |>

filter(month(sales_month) == 1,

year(sales_month) %in% c(1995:1997, 2017:2019)) |>

collect(n = 10) |>

kable(digits = 2)| sales_month | sales | pct_of_prev_3 |

|---|---|---|

| 1995-01-01 | 1308 | 138.12 |

| 1996-01-01 | 1373 | 122.63 |

| 1997-01-01 | 1558 | 125.17 |

| 2017-01-01 | 1386 | 94.63 |

| 2018-01-01 | 1217 | 84.95 |

| 2019-01-01 | 1004 | 74.74 |

prev_three_win <-

retail_sales |>

filter(kind_of_business == 'Book stores') |>

mutate(month = month(sales_month)) |>

group_by(month) |>

window_order(sales_month) |>

window_frame(-3, -1) |>

mutate(avg_prev_three = mean(sales, na.rm = TRUE)) |>

ungroup() |>

mutate(pct_of_prev_3 = 100 * sales/avg_prev_three)prev_three_win |>

select(sales_month, sales, pct_of_prev_3) |>

filter(month(sales_month) == 1,

year(sales_month) %in% c(1995:1997, 2017:2019)) |>

collect(n = 10) |>

kable(digits = 2)| sales_month | sales | pct_of_prev_3 |

|---|---|---|

| 1995-01-01 | 1308 | 138.12 |

| 1996-01-01 | 1373 | 122.63 |

| 1997-01-01 | 1558 | 125.17 |

| 2017-01-01 | 1386 | 94.63 |

| 2018-01-01 | 1217 | 84.95 |

| 2019-01-01 | 1004 | 74.74 |

3.5 Persistent storage

Having created a database connection, we can write the local data frame to the database using (say) copy_to().

We could specify temporary = FALSE if we wanted the data to be there permanently.3

3.5.1 Using PostgreSQL

3.5.2 Read-only databases

In some cases, you will have access to a database, but no write privileges for that database. In such a case, copy_inline() can be useful.4 Note that it seems you cannot interrogate a table created using copy_inline() using SQL, though it will behave in most respects just like a table created using copy_to() when using dbplyr. It is useful to note that copy_inline() is probably not a good solution if your data are hundreds of thousands of rows or more because the table is effectively turned into literal SQL.

retail_sales_alt <- copy_inline(db, retail_sales_local)3.5.3 Closing the database connection

dbDisconnect(db, shutdown=TRUE)I requested a tweak to

dplyrthat would have avoided the need to do this, but my request was denied. Given how awesomedbplyr/dplyris, I cannot complain.↩︎Though I will argue later that transitioning to

dplyr/dbplyris actually not difficult.↩︎Obviously this would not make sense if

dbis a connection to an in-memory database.↩︎I requested this function for a common use case I have. Thank you to the

dbplyrteam for making it happen.↩︎